Your supervisor bursts into your office and excitedly tells you about this great game changing thing he has seen on LinkedIn — Object Detection! Now he wants an Object Detection model in production within two days. In this post, I’ll show you how you manage it in one.

Note: You can find example code and a notebook in this repository. It was mainly inspired by Philip Schmid’s notebook on the topic.

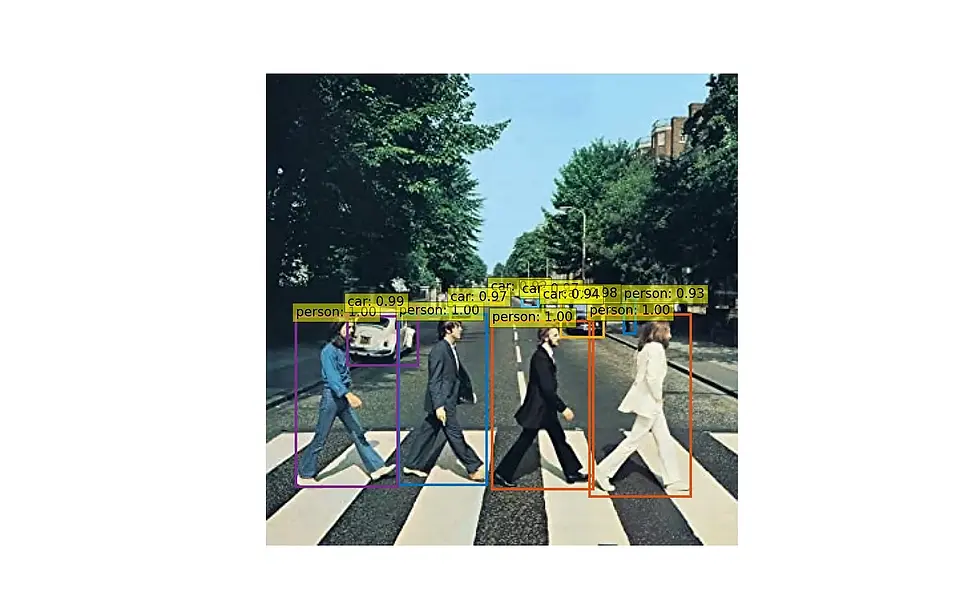

Object Detection Let us first look at the general task: Object Detection is the process of finding instances of various object classes in an image and mark them (in general with bounding boxes). This is one of the fundamental computer vision tasks and chances are high that you will encounter it sooner or later in your ML Computer Vision career.

Object detection in a city street

Requirements What does your supervisor expect of you? - detect standard classes like persons, cars, etc. - have a stable running solution - have it accessible from a backend - do not take longer than 2 days - have an acceptable accuracy

What does he not expect of you? - reach state-of-the-art results - fine-tune it on your own data - run it in real-time (≥ 24fps)

Knowing this, here is the plan of attack: Choose a pre-trained model ⇾ Deploy the model ⇾ Send data and get results ⇾ Job Done!

Sounds too easy? Then bear with me:

Choose a pre-trained model There are thousands of pre-trained object detection models available on the web. But we need to keep in mind, that our focus lies on easy deployment and not so much on accuracy. A good idea is to go with a popular framework and one of the most successful Deep Learning frameworks of the last years is Hugging Face. Its secret to success is in the ease of use and a really engaged community with thousands of model weights and demos on their Hub. Since some months they expanded from their natural grounds of Natural Language Processing to various modalities, including Computer Vision. And — what a coincidence — they happen to include Transformer based end-to-end object detection models in their transformers library: DETR and YOLOS (as of this writing). For our project it does not really matter which one we choose and we will pick YOLOS.

Deploy the model Many companies struggle with the topic of fast and reliable model deployment. I will show you how simple it can be.

All we need for this example is AWS SageMaker. Since a few months, they are offering SageMaker Serverless Inference, which lets you deploy a model without having to worry about the infrastructure behind it and only costs you while running inference.

So how do we get our Hugging Face YOLOS model into SageMaker?

The first step, is to upload our model weights to an S3 bucket. We get the weights from here: hustvl/yolos-tiny You can just clone the whole repository (git clone https://huggingface.co/hustvl/yolos-tiny) and then upload it to an S3 bucket.

Next up is creating a HuggingFaceModel object:

huggingface_model = HuggingFaceModel(

model_data=s3_location

sagemaker_session=sess,

role=iam_role,

transformers_version=”4.17",

pytorch_version=”1.10",

py_version=’py38',

)Once you have the model object, you can define a serverless configuration and then create your endpoint, by calling .deploy() on the model. This will return a predictor object, which you can later use to send data to the endpoint.

serverless_config = ServerlessInferenceConfig(

memory_size_in_mb=4096,

max_concurrency=10,

)image_serializer = DataSerializer(content_type='image/x-image')yolos_predictor = huggingface_model.deploy(

endpoint_name=”yolos-t-object-detection-serverless”,

serverless_inference_config=serverless_config,

serializer=image_serializer

)Note: The YOLOS model was only added to transformers in release 4.20.1. When I created this example, I needed to create my own inference script for the endpoint to work correctly. You can find it in the repository under code/inference.py.

Send data and get results We’ll go through this step real quick. Using the above predictor we just need to pass an image path:

res = yolos_predictor.predict(data="example_resized.jpg")The result we get back is a dictionary that contains prediction probabilities and corresponding bounding boxes for detected objects. In the repository you can find code for visualization of the results like so:

Job done! 🎊 Congratulations 🎊 🥳

If you have further questions about the code or the process, feel free to check out the repository: https://github.com/kartenmacherei/yolos-sagemaker-serverless or reach out to us.